At Intellishore, our skilled people have found a way to make more programs compatible with Azure Purview. The integration ensures better data governance, more insights, and more control of your data environments. In this blog post, we explain how to integrate data lineage from Databricks into Azure Purview and describe our journey to get there.

The motivation for the solution:

Data lineage is the process of tracking data and its transformations through a system. As data systems have grown more complex with the rise of the cloud, data lakes, and modern data warehouses, so has the difficulty of tracking data lineage.

Microsoft has recently released its modern data catalog, Azure Purview, in public preview. Microsoft describes it as: “Azure Purview is a unified data governance service that helps you manage and govern your on-premises, multi-cloud, and software-as-a-service (SaaS) data.” In practice, this means that Purview can track data lineage, scan data for sensitive information, tag the owners of data, etc.

The problem with Purview currently is that it supports only a limited number of Microsoft services, so we cannot track data outside the Microsoft ecosystem.

This is a major problem, as many modern data architectures are not locked 100% into the Microsoft ecosystem. For example, it has become quite common to use Spark and Databricks for big data processing. Imagine a data architecture that uses Azure Databricks for compute and Azure Data Lake for storage. Azure Purview will be able to see the files in the Data Lake, but it cannot track the transformations that are made in Databricks. Fortunately, Azure Purview is built on Apache Atlas, so we should be able to add custom data sources through the Atlas API.

If it is possible to integrate data lineage from Databricks into Azure Purview, it would give the business great insight into how its data is connected. This can ensure better governance, more insights, and superior reliability.

Introduction:

Our starting point was that we knew it was possible to send data to Azure Purview through the Apache Atlas API. We therefore needed to figure out how to capture the data lineage from the Databricks runtime.
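As a rough illustration of what sending data through the Apache Atlas API involves, the sketch below builds (without sending) a POST request to the Atlas v2 bulk entity endpoint. The function name `build_purview_bulk_request` and the base URL pattern `https://<account>.purview.azure.com/catalog/api/atlas/v2` are assumptions for illustration; check the endpoint for your own Purview account. Acquiring the Azure AD access token is left out.

```python
import json
import urllib.request


def build_purview_bulk_request(account_name: str, access_token: str, entities: list) -> urllib.request.Request:
    """Build (but do not send) a POST to the Atlas v2 bulk entity endpoint of a Purview account.

    Assumption: the catalog endpoint follows
    https://<account>.purview.azure.com/catalog/api/atlas/v2 (verify for your account).
    """
    url = f"https://{account_name}.purview.azure.com/catalog/api/atlas/v2/entity/bulk"
    # The bulk endpoint expects a JSON object with an "entities" list.
    body = json.dumps({"entities": entities}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            # Azure AD bearer token for the Purview resource, obtained elsewhere
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )
```

Passing the returned request to `urllib.request.urlopen` would actually send it; keeping construction separate makes the payload easy to inspect before anything reaches Purview.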

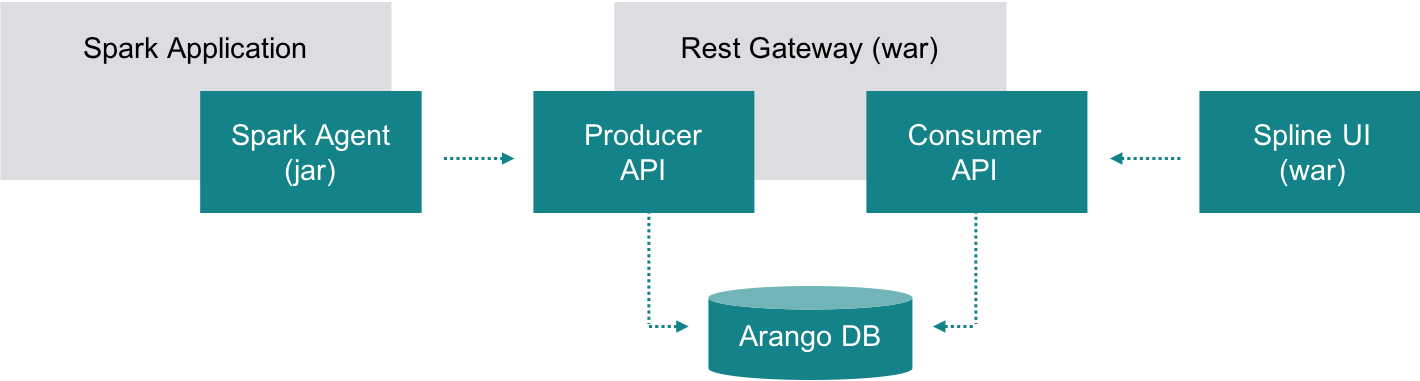

A Google search led us to a great open-source package called Spline from the ABSA Group. Spline is a full end-to-end package that tracks lineage in Spark. It does so by harvesting the Spark operations, sending them to a database, and then visualizing them in the Spline UI (a web page). All of this is done through APIs, as seen below:
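To make the harvesting step more concrete: the Spline agent describes each Spark write as an execution plan. The sketch below pulls the read sources and the write target out of such a plan. The field names (`operations`, `reads`, `inputSources`, `write`, `outputSource`) reflect our understanding of the Spline producer JSON and should be treated as an assumption; `extract_lineage` is a hypothetical helper.

```python
from typing import Any, Dict, List, Tuple


def extract_lineage(plan: Dict[str, Any]) -> Tuple[List[str], str]:
    """Return (input sources, output source) from a Spline execution plan.

    Assumption: the plan has operations.reads[*].inputSources and
    operations.write.outputSource, as in the Spline producer JSON.
    """
    operations = plan.get("operations", {})
    inputs: List[str] = []
    for read in operations.get("reads", []):
        for source in read.get("inputSources", []):
            if source not in inputs:  # keep first-seen order, drop duplicates
                inputs.append(source)
    output = operations["write"]["outputSource"]
    return inputs, output
```

Each (inputs, output) pair is exactly the information an Atlas lineage edge needs: which datasets went into a job and which dataset came out.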

Source: Spline 0.5 documentation (absaoss.github.io)

This sparked the idea that we could replace the Spline Producer API with our own and convert the Spline lineage structure to the Apache Atlas lineage structure, enabling Purview to read it. To do this, we developed a Python function that captures and sends the lineage data. To host the function cheaply and easily, we used Azure Functions. The function runs each time the Spline harvester in Spark sends something to its API. It first captures the data from Spline and saves it to Blob Storage. It then converts the data to a format Apache Atlas can read and sends it to Azure Purview.
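The capture, save, convert, and send steps above could be sketched roughly as follows. This is not our published function: the route suffix `/execution-plans`, the helpers `save_blob` and `send_entities` (stand-ins for a Blob Storage client and an Atlas API call), the `spline://` qualified-name scheme, and the Spline JSON fields are assumptions, and the Azure Functions HTTP-trigger wiring is omitted. It also assumes the referenced Data Lake paths already exist in Purview (for example from a scan) under the same qualified names Spline reports.

```python
import json
from typing import Any, Callable, Dict, List, Optional

# Assumed Purview type name for Data Lake Gen2 paths; every path maps to it in this sketch.
PATH_TYPE = "azure_datalake_gen2_path"


def _path_ref(qualified_name: str) -> Dict[str, Any]:
    """Atlas object reference identified by typeName + unique qualifiedName."""
    return {"typeName": PATH_TYPE, "uniqueAttributes": {"qualifiedName": qualified_name}}


def plan_to_atlas_process(plan: Dict[str, Any]) -> Dict[str, Any]:
    """Convert a Spline execution plan (assumed JSON shape) into an Atlas Process entity."""
    ops = plan["operations"]
    inputs = [src for read in ops.get("reads", []) for src in read.get("inputSources", [])]
    output = ops["write"]["outputSource"]
    return {
        "typeName": "Process",
        "attributes": {
            # Hypothetical naming scheme: one Process entity per Spline plan id.
            "qualifiedName": f"spline://{plan['id']}",
            "name": f"Spline plan {plan['id']}",
            "inputs": [_path_ref(src) for src in inputs],
            "outputs": [_path_ref(output)],
        },
    }


def handle_spline_post(
    route: str,
    body: bytes,
    save_blob: Callable[[str, bytes], None],
    send_entities: Callable[[List[Dict[str, Any]]], None],
) -> Optional[Dict[str, Any]]:
    """Stand-in for the Spline Producer API: store the raw payload, convert it, forward it."""
    if not route.rstrip("/").endswith("/execution-plans"):
        # Execution events (e.g. run timestamps) are ignored in this sketch.
        return None
    plan = json.loads(body)
    save_blob(f"spline/{plan['id']}.json", body)  # 1. keep the raw Spline payload
    entity = plan_to_atlas_process(plan)          # 2. convert to Atlas format
    send_entities([entity])                       # 3. hand over to Purview
    return entity
```

Injecting the storage and sending callables keeps the conversion logic testable locally, without Azure credentials.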

Note that this process can be replicated in any cloud environment.

#DISCLAIMER – Azure Purview is in Preview and the code linked with this article is meant as a proof of concept and therefore not suited for production yet.

To illustrate the process further, we have made a video guide on how to implement data lineage from Databricks to Azure Purview.

Why is this a smart solution for your organization?

The video shows that it is quite easy to integrate Databricks lineage into Azure Purview, and the approach shows great promise. The code in the Azure Function could be developed further; the main thing to refine would be a more dynamic way of handling other data lakes, such as Amazon S3 storage.
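One way to handle other data lakes more dynamically is to dispatch on the URI scheme of each path Spline reports and map it to a Purview type name and qualified name. In the sketch below, the type names `azure_datalake_gen2_path` and `azure_blob_path` and the `https://<host>/<container>/<path>` qualified-name format are assumptions about how Purview registers scanned assets, and `aws_s3_object` is a placeholder; look up the real S3 type in your account's type definitions before relying on it.

```python
from typing import Callable, Dict, Tuple
from urllib.parse import ParseResult, urlparse

Resolver = Callable[[ParseResult], Tuple[str, str]]


def _azure_path(type_name: str) -> Resolver:
    """abfss/wasbs URIs carry the container before '@'; map to https://<host>/<container>/<path>."""
    def resolve(uri: ParseResult) -> Tuple[str, str]:
        return type_name, f"https://{uri.hostname}/{uri.username}{uri.path}"
    return resolve


def _s3_path(uri: ParseResult) -> Tuple[str, str]:
    # Placeholder type name: check your Purview type definitions for the real S3 type.
    return "aws_s3_object", f"s3://{uri.netloc}{uri.path}"


# Registry keyed by URI scheme; supporting another store means adding one entry.
RESOLVERS: Dict[str, Resolver] = {
    "abfss": _azure_path("azure_datalake_gen2_path"),
    "abfs": _azure_path("azure_datalake_gen2_path"),
    "wasbs": _azure_path("azure_blob_path"),
    "wasb": _azure_path("azure_blob_path"),
    "s3": _s3_path,
    "s3a": _s3_path,
}


def to_purview_reference(path: str) -> Dict[str, object]:
    """Turn a storage URI reported by Spark into an Atlas object reference."""
    uri = urlparse(path)
    resolver = RESOLVERS.get(uri.scheme)
    if resolver is None:
        raise ValueError(f"No Purview mapping for scheme {uri.scheme!r}: {path}")
    type_name, qualified_name = resolver(uri)
    return {"typeName": type_name, "uniqueAttributes": {"qualifiedName": qualified_name}}
```

With this design, the conversion code stays unchanged when a new store is added; only the `RESOLVERS` registry grows, and unknown schemes fail loudly instead of producing broken lineage.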

A general problem with the project is that Azure Purview is still in preview, so many things, e.g. data connectors, are not finalized, and many of the tools for Purview are developing fast.

Purview and especially our code is not suited for production workloads yet, but the ability to add any data source to Purview with the Atlas API can vastly improve data governance solutions.
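As a sketch of what adding a new kind of data source through the Atlas API might look like, the function below builds an Atlas type definition for a custom entity type that inherits from the built-in `DataSet` type. The type name `hypothetical_s3_file` and its attributes are made up for illustration; such a payload would be POSTed to the `types/typedefs` endpoint of the Atlas v2 API before entities of that type can be created.

```python
from typing import Any, Dict, List


def custom_dataset_typedef(type_name: str, string_attributes: List[str]) -> Dict[str, Any]:
    """Atlas typedef payload for a new entity type that inherits from the built-in DataSet type."""
    return {
        "entityDefs": [
            {
                "name": type_name,
                # Inheriting from DataSet provides qualifiedName, name, description, etc.,
                # and lets the new type appear as an input/output of Process entities.
                "superTypes": ["DataSet"],
                "attributeDefs": [
                    {
                        "name": attr,
                        "typeName": "string",
                        "isOptional": True,
                        "cardinality": "SINGLE",
                        "isUnique": False,
                        "isIndexable": False,
                    }
                    for attr in string_attributes
                ],
            }
        ]
    }
```

For example, `custom_dataset_typedef("hypothetical_s3_file", ["bucket", "key"])` describes a file type with two extra string attributes on top of the standard `DataSet` ones.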

Azure Purview is exciting, and we will be following its development in the coming months.

This guide takes you through the whole process and will help you integrate the solution and ensure better data governance, more insights, and control of your data environments.


What are the key success factors in sustaining a healthy data platform?


001 | Design both the infrastructure and the ongoing management processes to not just ensure data quality but also sustain data health

002 | Drive focus and priorities on real use cases rather than on considerations of IT efficiency

003 | Pool critical data across silos to create one shared truth, a common source of data

004 | Establish clear accountability for ensuring data quality, security, and access

We help you succeed in two ways

001 | Designing a scalable data infrastructure

Define a data architecture that is tailored to the needs of the priority use cases, and assess and select tools based on a combination of IT, procurement, and user considerations.

002 | Defining an organization-wide governance approach

Identify the data governance issues that are most critical to address, and establish a critical end-product that creates clear accountability and processes for managing issues in the long run.